Speech Recognition in Unity

Introduction

In this exploration I wanted to try using my voice as an input. After a bit of research I decided to try two implementation methods: calling an online AI API to convert a voice clip to text, and using the Microsoft phrase recognizer. Each method had its own pros and cons, both in usage and in implementation.

The goal of this project was a system that listens for my voice command and casts the corresponding spell in game. I based this system on the many wizards and spellcasters in games and movies. I’ve always enjoyed it when authors put extra effort into fleshing out their magic system and give each spell its own unique chant or incantation.

Implementation 1

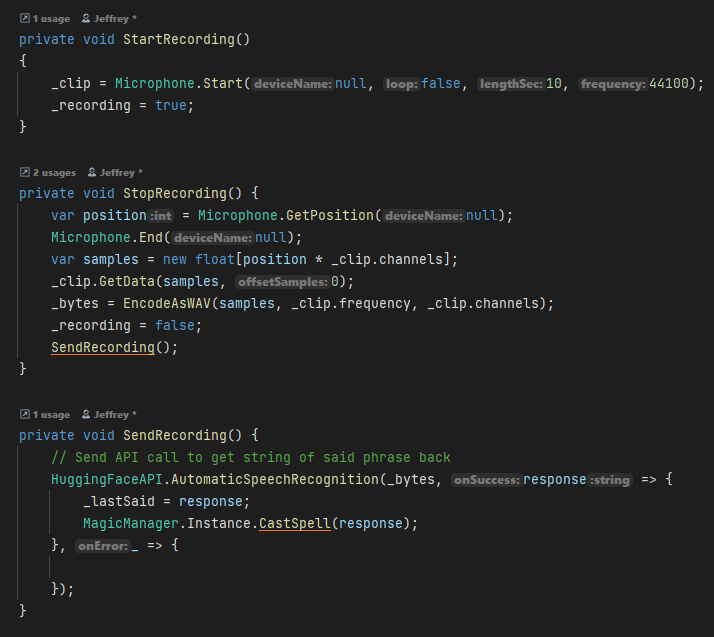

The first implementation combined an online AI, HuggingFace, to turn a WAV file into a string, with Levenshtein distance to determine which incantation was said.

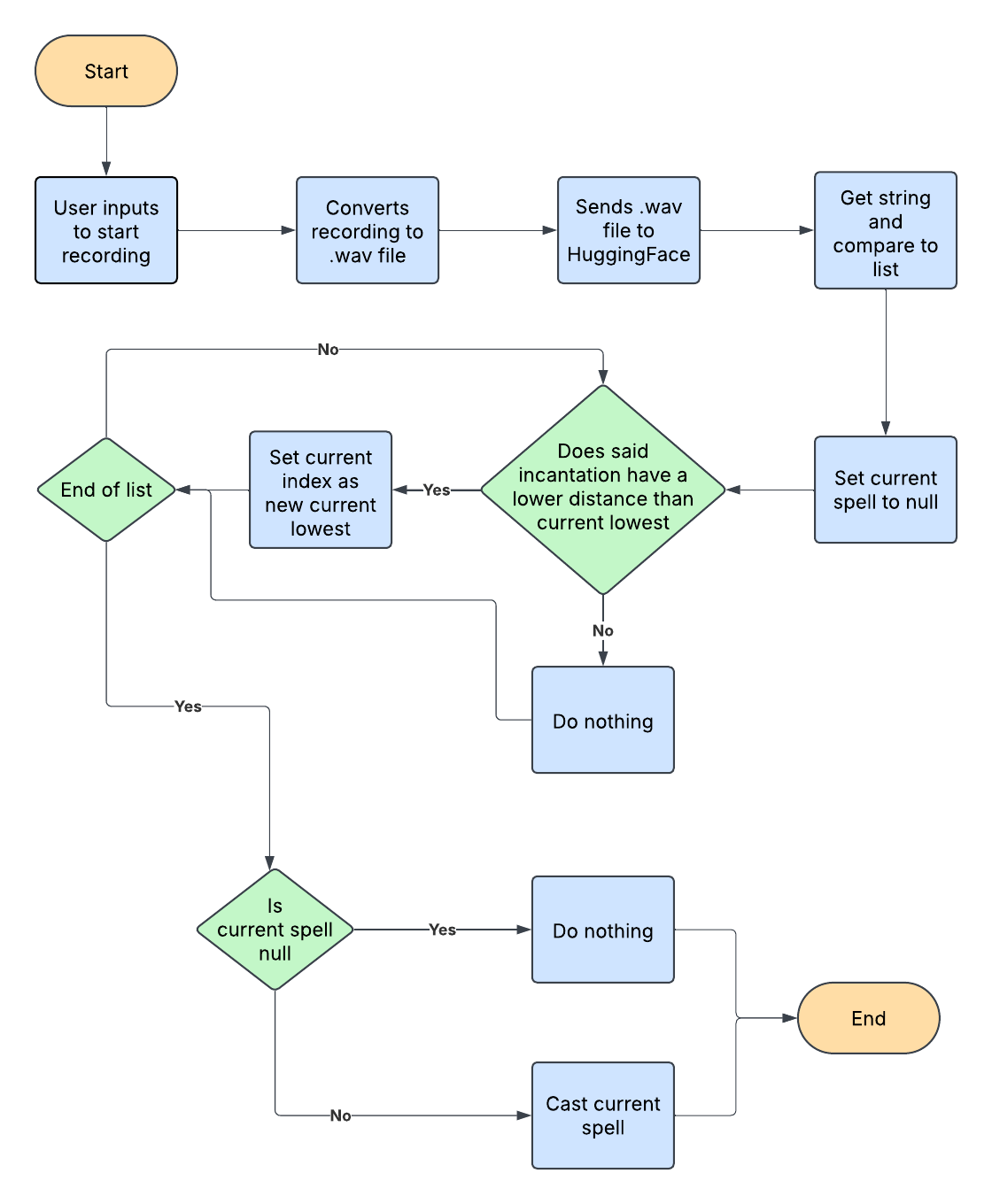

The first step in setting up this system is to get a voice recording from the user and a string of what they said. For this I used a HuggingFace API call that returns a string from a WAV file. So the steps are: record the user's voice, convert the recording to a .wav file, send the .wav file to HuggingFace to convert it to a string, then return the result from HuggingFace.
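The "convert to a .wav file" step amounts to wrapping raw audio samples in a WAV header. The project itself runs in Unity; this minimal sketch uses Python only to illustrate the idea. It assumes the recording arrives as a flat list of interleaved float samples between -1.0 and 1.0 (the format Unity's AudioClip.GetData fills in), that 16-bit PCM at 16 kHz is acceptable to the transcription model, and that samples_to_wav_bytes is a hypothetical helper name. The returned bytes are what would be sent to HuggingFace.

```python
import io
import struct
import wave


def samples_to_wav_bytes(samples, sample_rate=16000, channels=1):
    """Encode float samples in [-1.0, 1.0] as an in-memory 16-bit PCM WAV file.

    Assumes `samples` is already interleaved when channels > 1.
    """
    # Clamp each sample to [-1.0, 1.0] and scale it to the signed 16-bit range.
    pcm = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]

    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav.setframerate(sample_rate)
        # WAV stores PCM samples as little-endian signed shorts.
        wav.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))
    return buffer.getvalue()
```

Writing into a BytesIO buffer keeps the file in memory, so nothing has to touch the disk before the upload. The sample rate is an assumption: it should match the rate the clip was actually recorded at.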

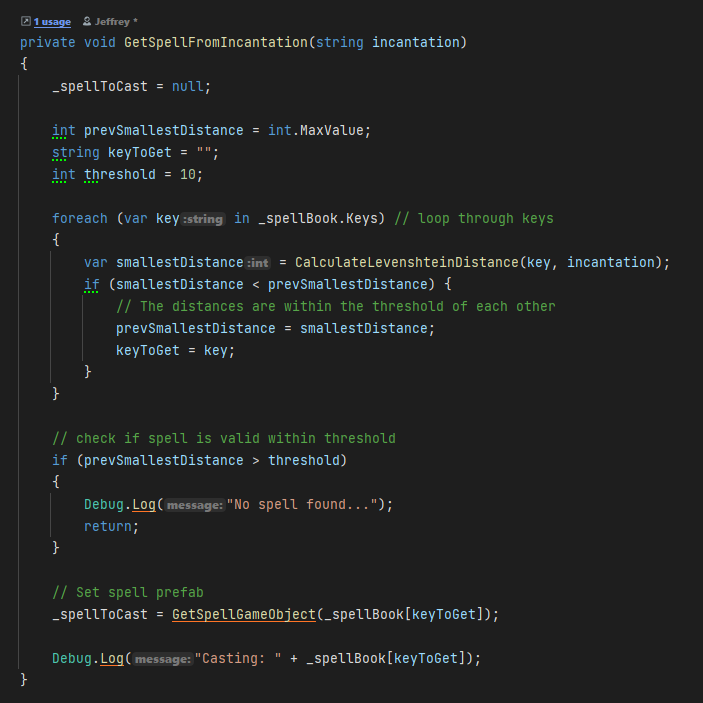

The next step is to use this string to determine which incantation was said. We can compare the string from HuggingFace against a list of the correct incantations and pick the one that matches most closely. To find the best match among all incantations we can use Levenshtein distance. In short, Levenshtein distance compares two strings and returns the minimum number of single-character insertions, deletions, or substitutions needed to turn one into the other; the lower the value, the closer the strings are to matching, and 0 means they are identical.
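As a minimal sketch of the standard dynamic-programming form of Levenshtein distance (written in Python for illustration, not taken from the project's Unity code), the function below keeps only one previous row of the edit-distance table at a time:

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    or substitutions needed to turn `a` into `b`."""
    # prev[j] = distance between the first i-1 characters of a and the first j of b.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning a[:i] into "" takes i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute ca -> cb (free if equal)
            ))
        prev = curr
    return prev[-1]
```

For example, levenshtein("kitten", "sitting") is 3: two substitutions and one insertion.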

In this example we have a dictionary that maps each incantation to its spell name. When we cast a spell, the code takes the string of what we said, compares it against this dictionary, and returns the matching spell name. A separate function then casts the spell and applies its effects.

Before comparing, we set the current spell to null so that nothing happens if no incantation matches closely enough. Next we set a threshold for error tolerance: the largest distance that still counts as a match. This threshold allows for small mistakes when the user is slightly off in the incantation, and for small errors in what HuggingFace returns. Raising the threshold accepts looser matches; keeping it low prevents the user from casting a spell by saying something only roughly similar to the incantation.
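Putting the dictionary, the null starting spell, and the threshold together, the matching step could be sketched in Python as follows. This is an illustration, not the project's code: match_spell, normalize, the default threshold of 3, and the lowercase/punctuation clean-up are all assumptions (the clean-up is there because transcriptions often include capitals and punctuation that would otherwise count as errors). The distance function is passed in so the sketch does not depend on any particular Levenshtein implementation.

```python
import string

# Translation table that deletes ASCII punctuation (e.g. a trailing "." in a transcript).
_STRIP_PUNCTUATION = str.maketrans("", "", string.punctuation)


def normalize(text):
    """Lowercase, drop punctuation, and collapse whitespace before comparing."""
    return " ".join(text.lower().translate(_STRIP_PUNCTUATION).split())


def match_spell(transcript, incantations, distance, threshold=3):
    """Return the spell whose incantation is closest to `transcript`, or None.

    `incantations` maps incantation text -> spell name, and `distance(a, b)`
    is an edit-distance function such as Levenshtein distance.
    """
    current_spell = None  # stays None if no incantation is close enough
    best_distance = None
    said = normalize(transcript)
    for incantation, spell_name in incantations.items():
        d = distance(said, normalize(incantation))
        # Accept only matches within the tolerance, keeping the closest one.
        if d <= threshold and (best_distance is None or d < best_distance):
            current_spell, best_distance = spell_name, d
    return current_spell
```

For example, with a hypothetical dictionary {"ignis flamma": "Fireball"} and a Levenshtein distance function, the transcript "Ignis flama!" normalizes to "ignis flama", which is one edit away, so the call returns "Fireball". Because the threshold counts absolute edits, a fixed value is proportionally more forgiving for short incantations than for long ones; dividing the distance by the incantation's length is one possible alternative.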

Here is a demo of the spell casting in action.

Implementation 2

The second implementation used the Microsoft phrase recognizer in Unity to capture audio and compare it against a list of phrases and words.

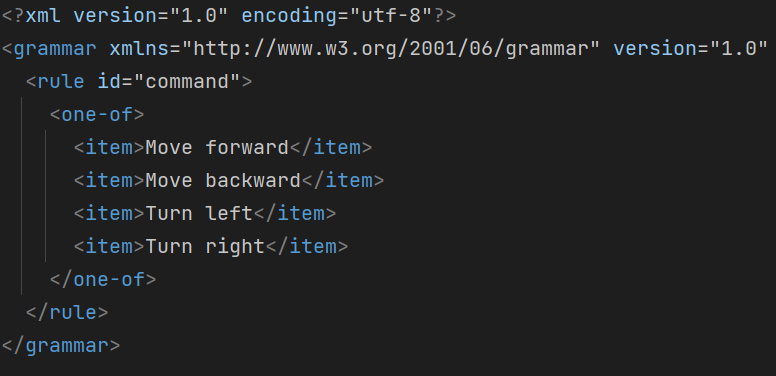

To set up our system we first need to define which phrases should be recognized. To do this we create an .xml file and put all our phrases into it like this:
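Unity's GrammarRecognizer reads grammars written in the W3C Speech Recognition Grammar Specification (SRGS) XML format. If the phrase list already lives somewhere else, one option is to generate the file rather than write it by hand. This Python sketch assumes a single public rule whose one-of element lists each phrase as an alternative; the helper build_srgs_grammar, the rule name "spells", and the "en-US" language tag are hypothetical, and the author's actual file may be structured differently.

```python
from xml.sax.saxutils import escape, quoteattr

SRGS_NAMESPACE = "http://www.w3.org/2001/06/grammar"


def build_srgs_grammar(phrases, rule_id="spells", lang="en-US"):
    """Build an SRGS grammar whose root rule matches exactly one of `phrases`."""
    # Each phrase becomes one <item> alternative; escape() handles &, < and >.
    items = "\n".join(f"      <item>{escape(p)}</item>" for p in phrases)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<grammar version="1.0" xml:lang={quoteattr(lang)} root={quoteattr(rule_id)}\n'
        f'         xmlns={quoteattr(SRGS_NAMESPACE)}>\n'
        f'  <rule id={quoteattr(rule_id)} scope="public">\n'
        "    <one-of>\n"
        f"{items}\n"
        "    </one-of>\n"
        "  </rule>\n"
        "</grammar>\n"
    )
```

The returned string would be saved as the .xml file that the GrammarRecognizer is constructed with. SRGS requires at least one item inside one-of, so the phrase list should not be empty.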

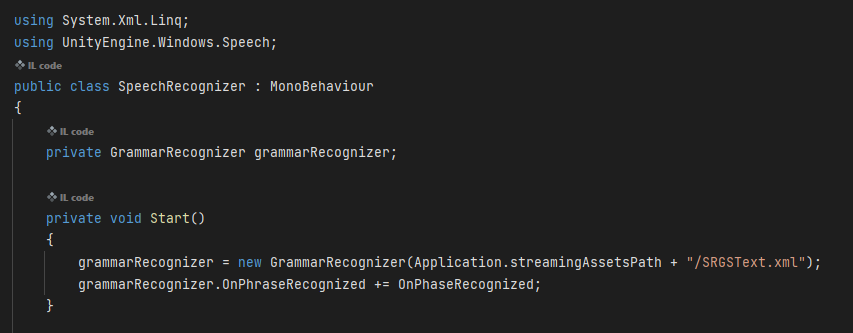

Next we need to reference Unity's Windows speech namespace (using UnityEngine.Windows.Speech;) at the top of our script. Now we can set up our functions and grammar recognizer. First we declare a field of type GrammarRecognizer at the top of the class, and then we initialize it in the Start method. Next we create a function called "OnPhraseRecognized". This function will be called when a phrase from our XML file is said; it receives a PhraseRecognizedEventArgs whose text field holds the recognized phrase. Finally, we subscribe this function to the GrammarRecognizer's OnPhraseRecognized event.

Now that our phrases and grammar recognizer are set up, we can add some logic to Update that starts the recognizer listening when the user presses a button, and then try out the speech recognition.

Pros and Cons

The first method, HuggingFace API plus Levenshtein distance, is more flexible because the threshold can be adjusted to tolerate more user error. Its main drawback is that it relies on an API call, so it cannot work without an internet connection.

The main advantage of the second method is that it is much easier to get started with. Its drawback is that you are limited to the phrases defined in the grammar, and the system will not recognize anything else the user says. This voice recognition is quite accurate, since it is Microsoft's own speech technology (used in Microsoft features such as Cortana), but there is still room for error.

What I Learned

In this exploration I learned more about using different inputs in Unity. In the future I would love to explore this concept further and possibly make a game with voice as the main input. This exploration also required a lot of digging, since documentation on the Microsoft grammar recognizer was hard to find. I had a lot of fun stringing together multiple techniques to make the voice input work.